The Context Conundrum: What Happens Before Your LLM Even Sees Your Prompt

When people use an LLM, they often think the magic starts the moment they type a prompt.

But behind the scenes, there’s a ton of engineering that happens before the LLM even sees a word.

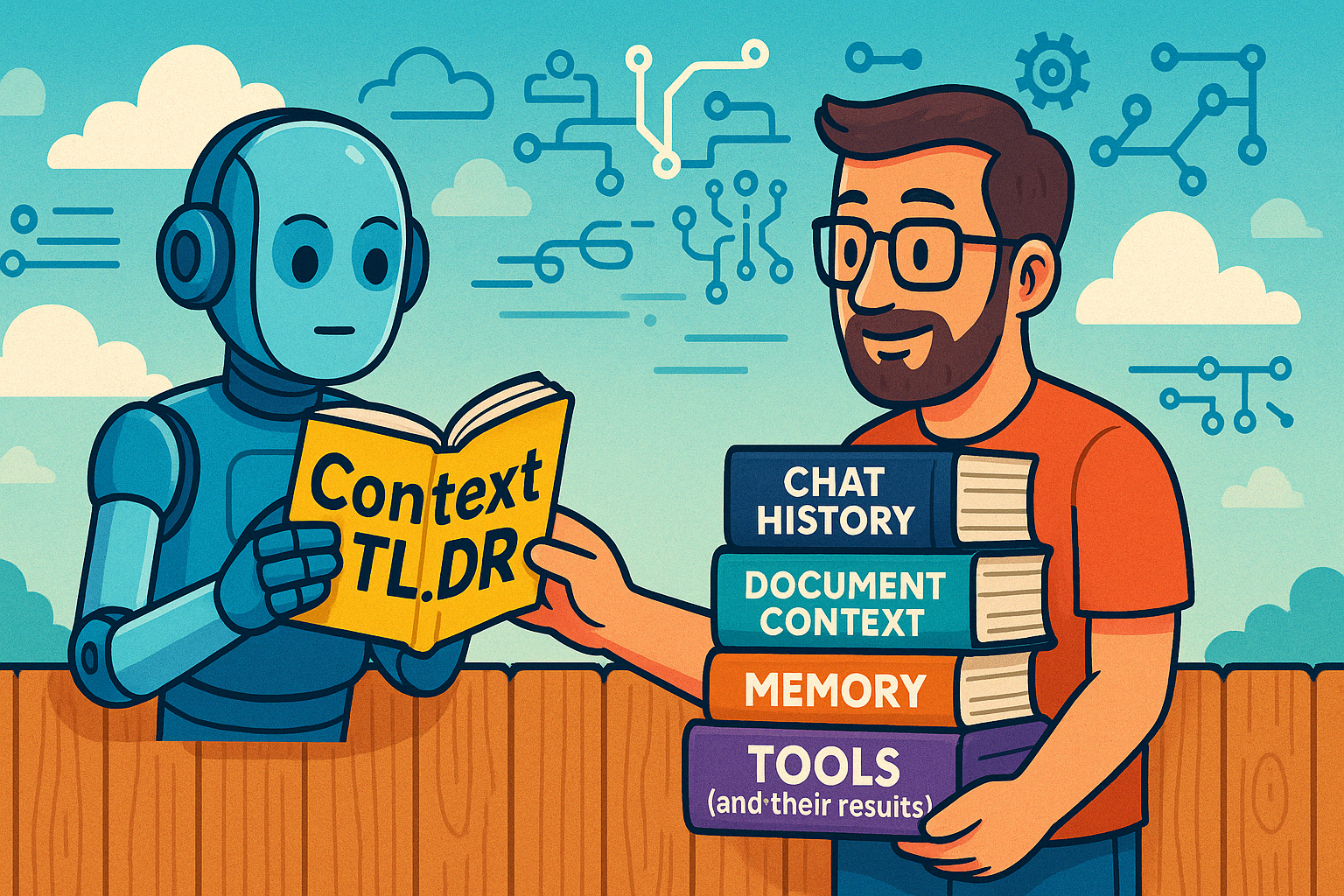

The real work? Gathering relevant context (past messages, document uploads, memory, tool outputs) and compressing it all into a clean, digestible TL;DR.

The LLM isn’t doing everything on its own. It’s reading a Context TL;DR that your app has carefully assembled.
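To make that gather-then-compress step concrete, here is a minimal Python sketch under loudly stated assumptions. The names `ContextItem`, `relevance`, `build_context_tldr`, and `assemble_prompt` are hypothetical. Relevance is crude word overlap standing in for embedding similarity. "Compression" here means dropping irrelevant items and packing the rest into a word budget, not summarizing them with a model, which a real app might do instead.

```python
import re
from dataclasses import dataclass


@dataclass
class ContextItem:
    source: str  # hypothetical labels: "history", "document", "memory", "tool"
    text: str


def _words(text: str) -> set[str]:
    # Lowercase word tokens, ignoring punctuation.
    return set(re.findall(r"\w+", text.lower()))


def relevance(query: str, text: str) -> float:
    # Fraction of query words that also appear in the text.
    # Crude stand-in for embedding similarity (an assumption, not a real retriever).
    q = _words(query)
    return len(q & _words(text)) / len(q) if q else 0.0


def build_context_tldr(query: str, items: list[ContextItem], budget_words: int = 50) -> str:
    # Rank every candidate by relevance; sorted() is stable, so ties keep input order.
    ranked = sorted(items, key=lambda it: relevance(query, it.text), reverse=True)
    lines, used = [], 0
    for item in ranked:
        if relevance(query, item.text) == 0:
            continue  # drop context that shares no words with the query
        n = len(item.text.split())  # word count as a rough proxy for tokens
        if used + n > budget_words:
            continue  # skip items that would overflow the budget
        lines.append(f"[{item.source}] {item.text}")
        used += n
    return "\n".join(lines)


def assemble_prompt(query: str, items: list[ContextItem]) -> str:
    # The model only ever sees this string: the TL;DR followed by the user's prompt.
    return f"Context TL;DR:\n{build_context_tldr(query, items)}\n\nUser: {query}"


if __name__ == "__main__":
    items = [
        ContextItem("history", "User asked about refund policy yesterday"),
        ContextItem("document", "Refund policy: items can be returned within 30 days"),
        ContextItem("memory", "User prefers short answers"),
        ContextItem("tool", "Weather API: sunny, 22C"),
    ]
    print(assemble_prompt("What is the refund policy?", items))
```

With these sample inputs, the history and document snippets make it into the TL;DR, while the weather tool output and the unrelated memory are dropped. Swapping `relevance` for embedding similarity, or running the selected items through a summarization call, would be natural next steps beyond this sketch.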